Redesigning SudoStudy, an AI-powered ed-tech platform, to help students study smarter

Role: Senior Product Designer

Business goals: lift weekly active users (WAU), increase sessions per user, and decrease first-session drop-off

Business impact:

+66.7%

WAU

(from 1,280 to 2,187)

+27%

Sessions per user (practice mode usage)

-31%

In first-session drop-off

Tyler opens SudoStudy after class. So many subjects to choose from.

None answer the only question he cares about:

what should I study next?

He clicks a few menus.

Closes the tab.

Plans to “study later.”

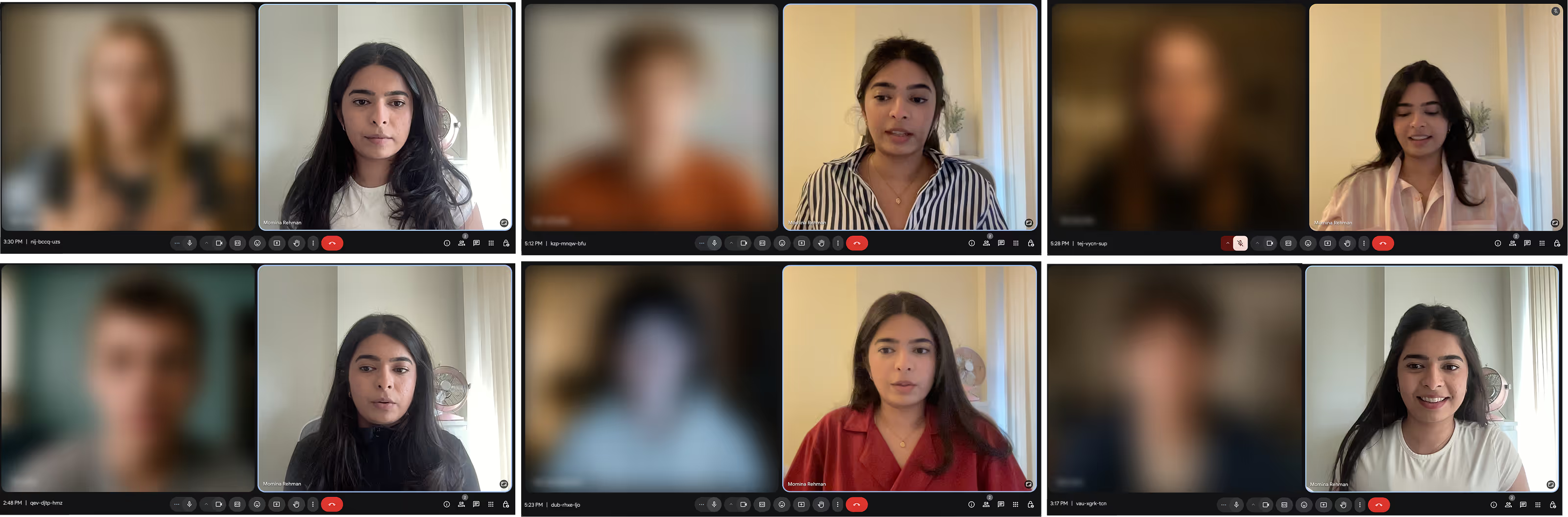

I saw versions of this across interviews:

I struggle to stay consistent with my schedule…sometimes I don’t even have one...it’s hard to figure out which topics I should focus on

-Pugh, 16 years old, O Levels

I get overwhelmed by all the topics and menus…I often skip sessions...

I don’t know where to start next.

-Cole, 17 years old, A Levels

I am a bit lenient when self-assessing, I prefer external feedback to gauge progress…delay in feedback makes me lose interest

-Grace, 16 years old, O Levels

User interview snapshots

This became the brief:

make the next step obvious and rewarding - fast.

Research synthesis

What I walked into

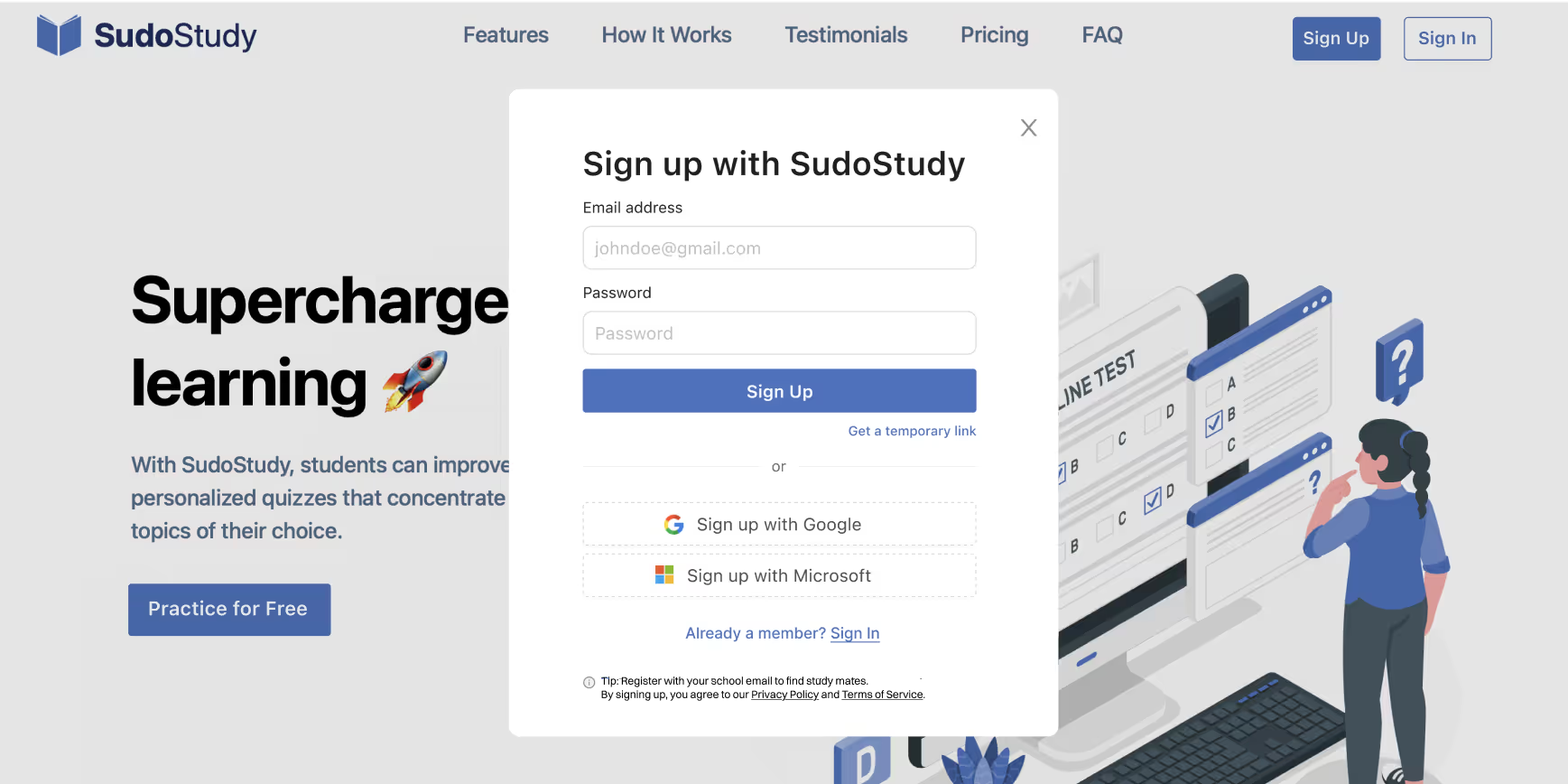

- No onboarding. New users hit a combined auth modal: sign up, sign in, magic link, password, everything at once. It sometimes failed. Rage‑clicks were common. People bounced.

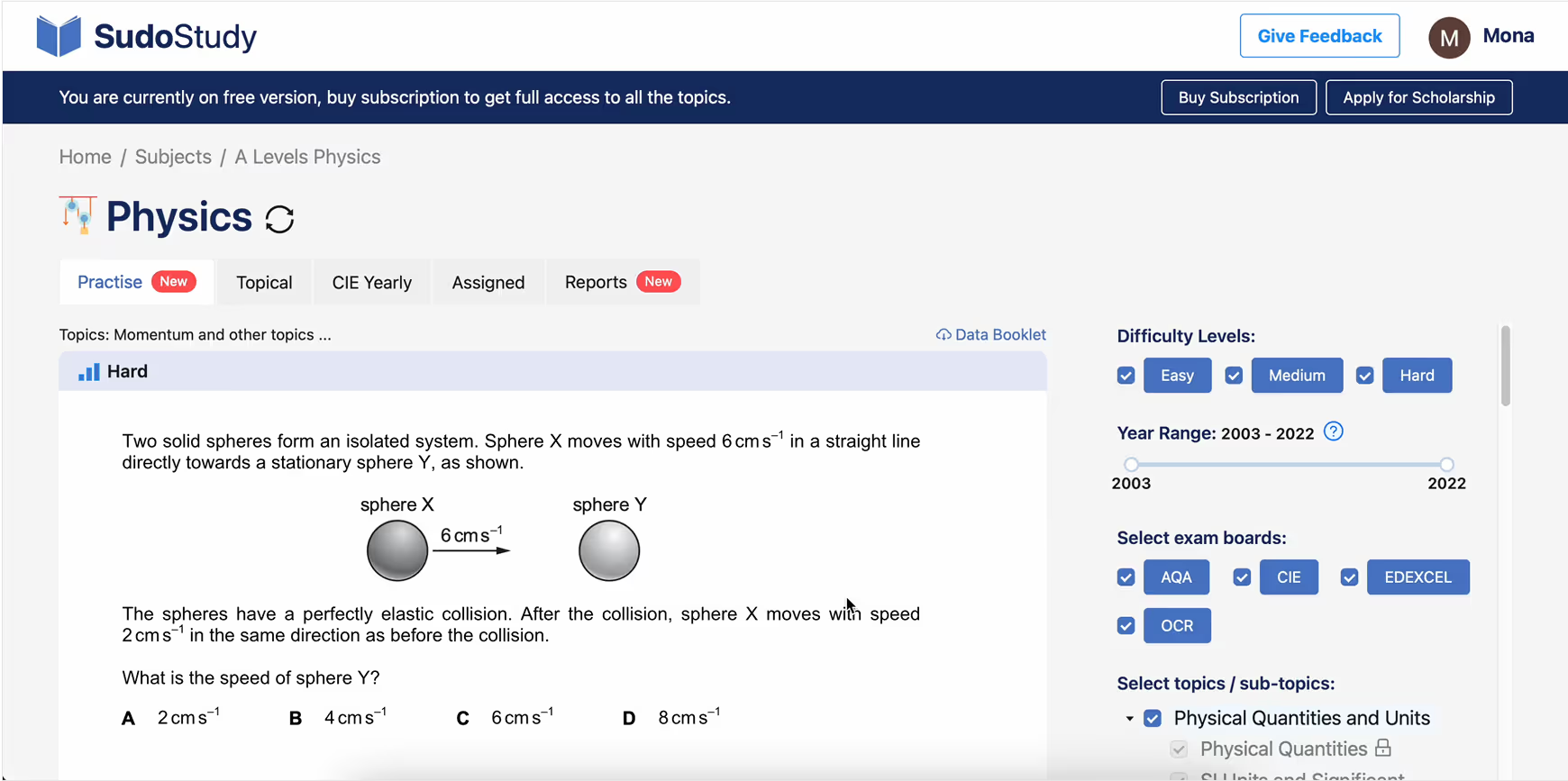

- Practice mode was cluttered. Competing CTAs (“Give feedback,” “Buy subscription”). Redundant choices. No clear exit. Multi‑select topics that didn’t match how students actually study.

- Home didn’t guide the next session. Students couldn’t see strengths, gaps, or coverage. They felt lost between subjects and topics.

- The product was desktop-first, but mobile behavior mattered: the UI didn't support quick, focused sessions.

The bet:

If we reduce choice, surface the next best action, and fix first‑run friction, students will activate faster and come back more often.

My role and how we worked

I led end‑to‑end design across research, IA, UI, prototyping, and developer handoff. I joined sprint planning, wrote user stories with acceptance criteria, and partnered tightly with the founder, PMs, and a small dev team. We shipped in two‑week increments, with clear “measure next” hooks in each release. Marketing later used the new visuals in campaigns and on the website.

Evidence that shaped the plan

Session replays & funnels (PostHog):

- Confusing auth modal → immediate drop‑offs after sign‑up/sign‑in

- Unclear navigation in practice and quizzes

- “Too much, too soon” options causing paralysis

- UI elements (buttons, instructional cues) needed to be more explicit

Interviews (20 students):

- Students prefer one topic per session.

- They want external feedback and quick wins.

- They need a sense of progress (how am I doing?) and coverage (what have I touched?).

These insights drove three product bets:

1

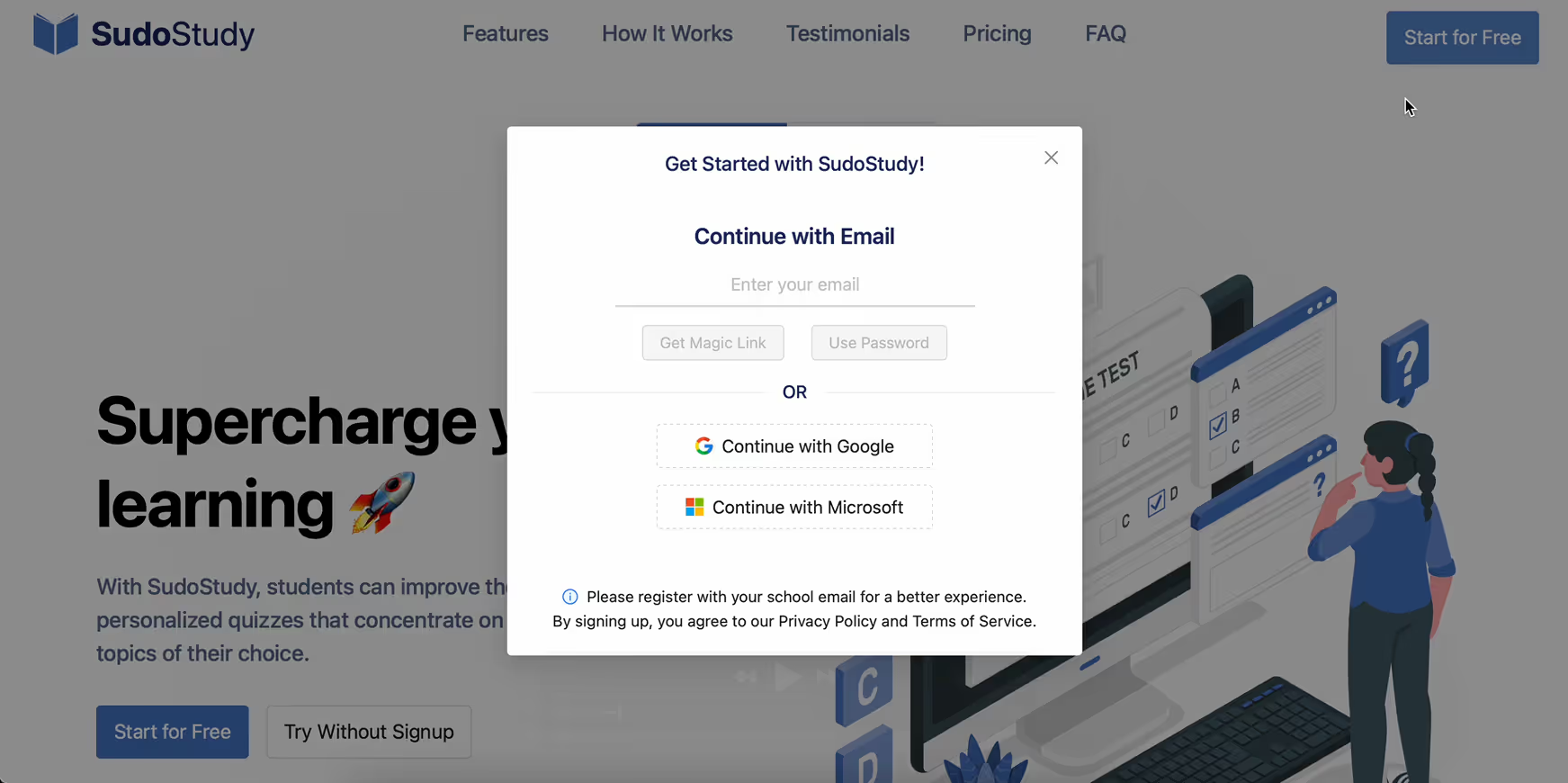

Fix first‑run friction - authentication and onboarding

Problems

New users landed in a catch‑all modal that mixed sign up, sign in, magic link, and password flows. It was unreliable and unclear. Many bounced before seeing value.

Decisions

- Separated authentication paths and hardened error states.

- Introduced a short onboarding that sets intent and momentum: pick subject(s), set a starting point, get a clear “Start practicing” CTA.

- Wrote crisp, action‑first copy.

Trade‑offs

We kept onboarding short so we could ship fast and measure impact. Deeper personalization was deferred to a later release.

Results

Immediate drop‑offs fell 31%. More students reached their first real practice session.

Old ‘Getting Started’ modal

New ‘Getting Started’ modal

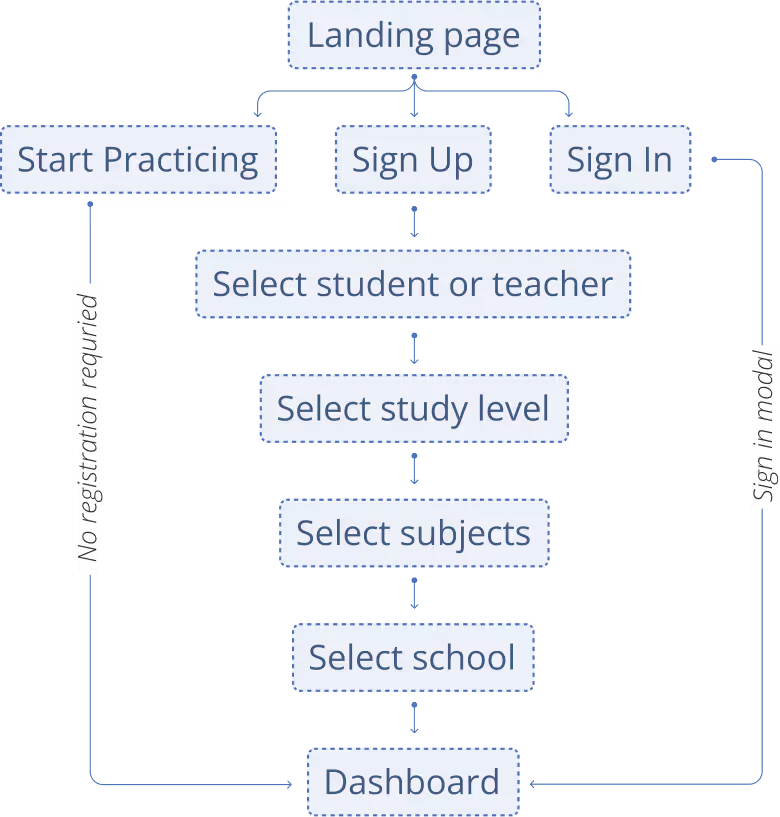

Old onboarding user flow

New onboarding user flow

Video walkthrough of onboarding design prototype

2

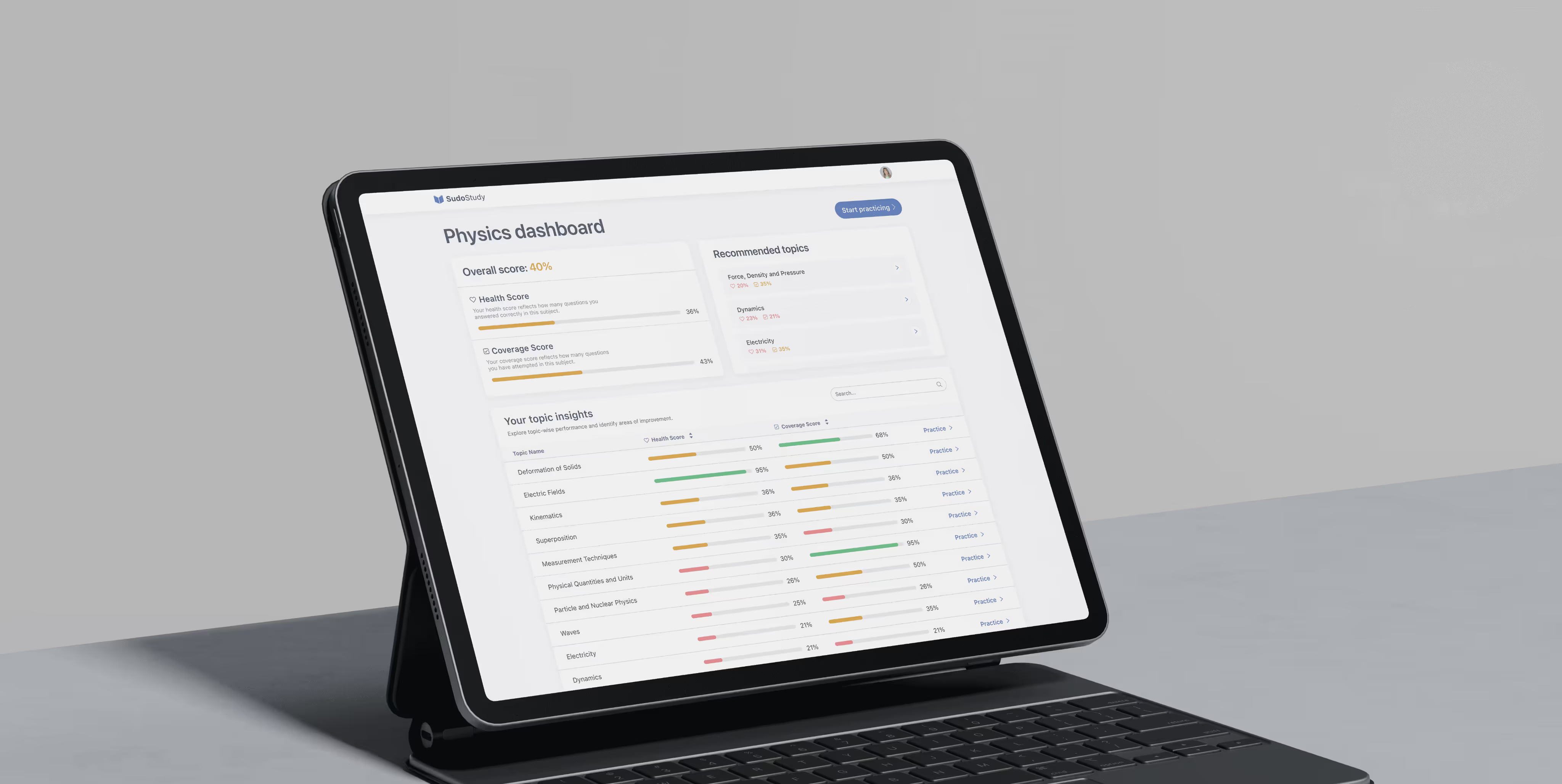

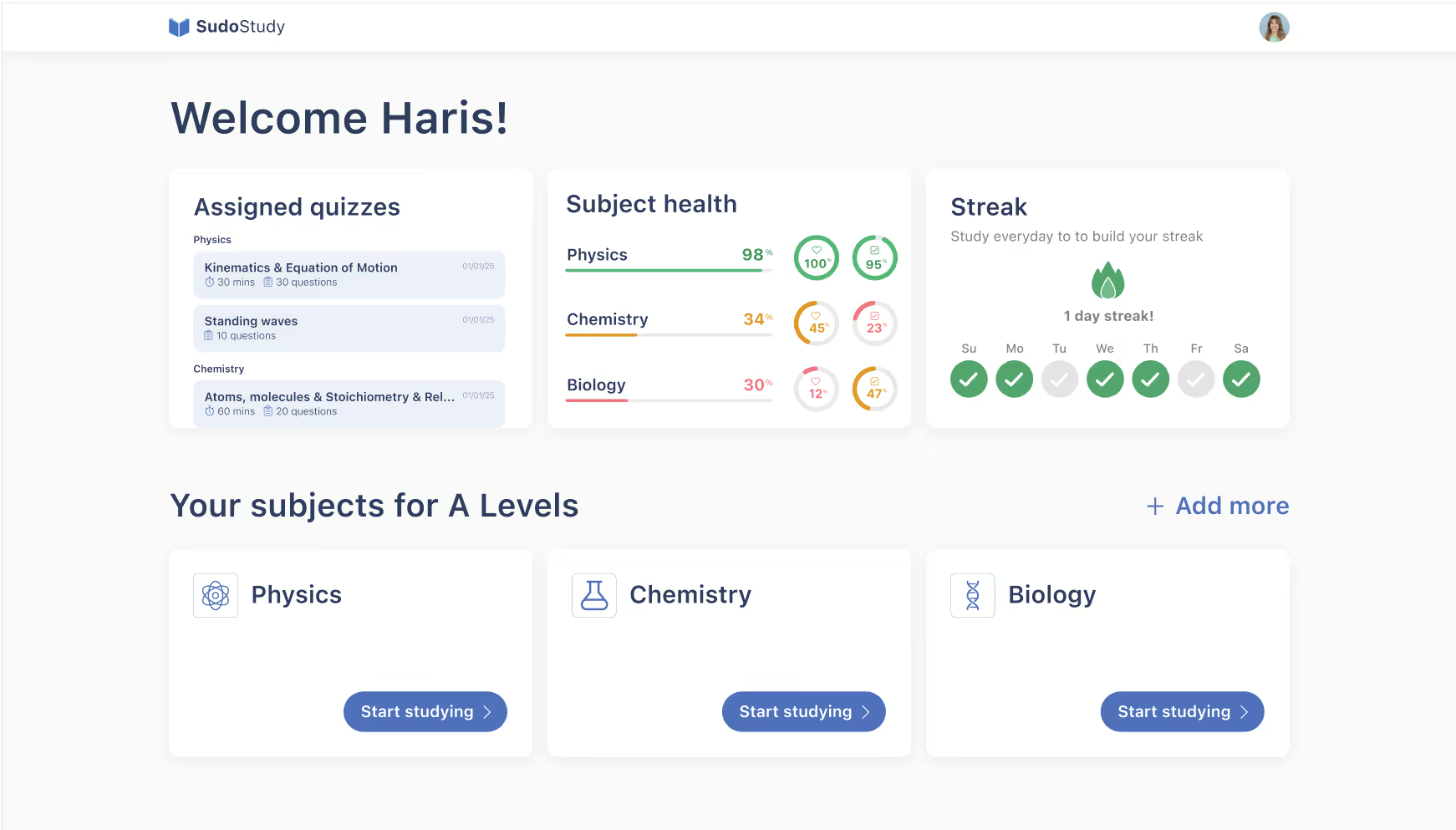

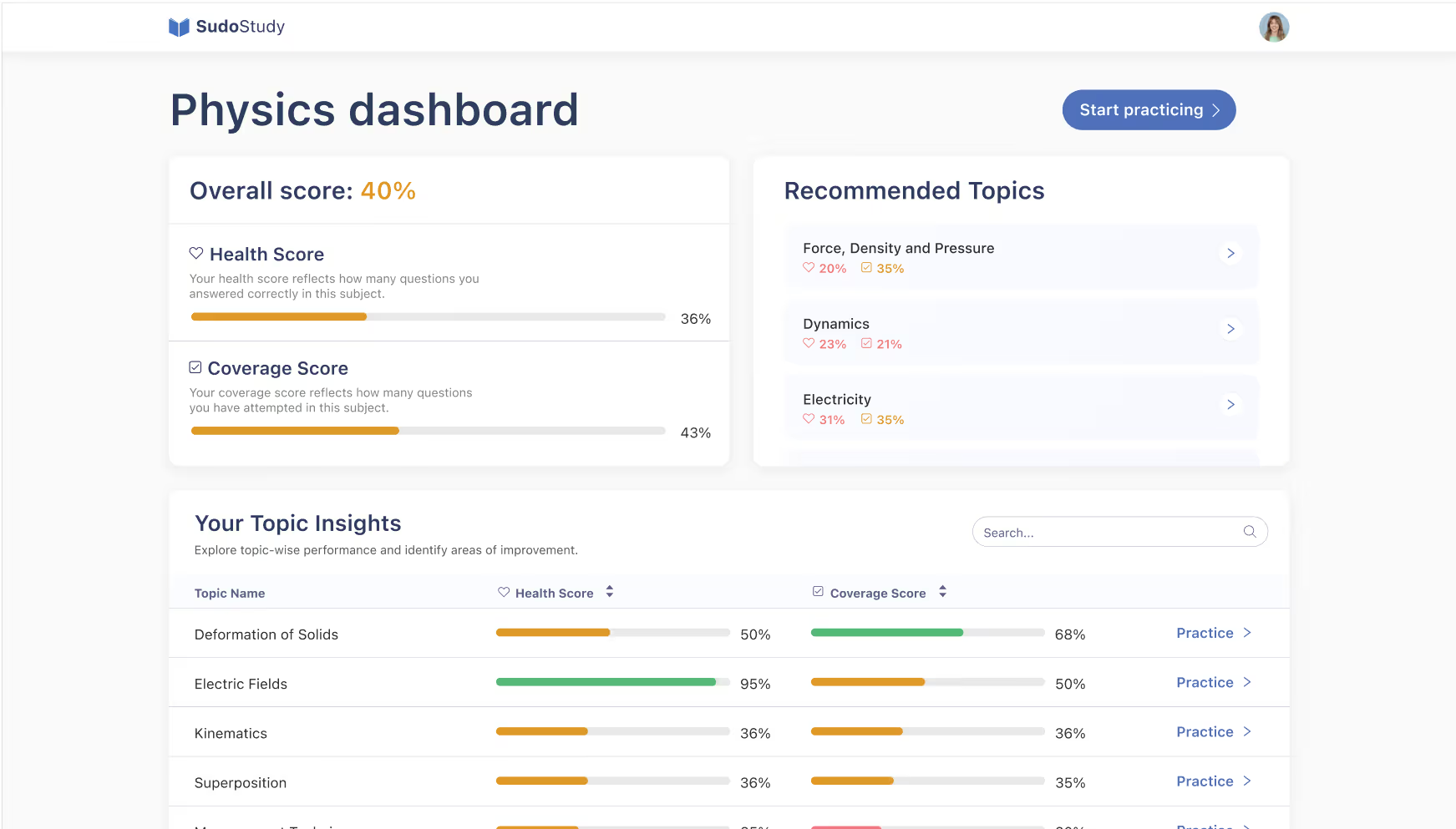

Make “what’s next” obvious - the Home reframe

Problems

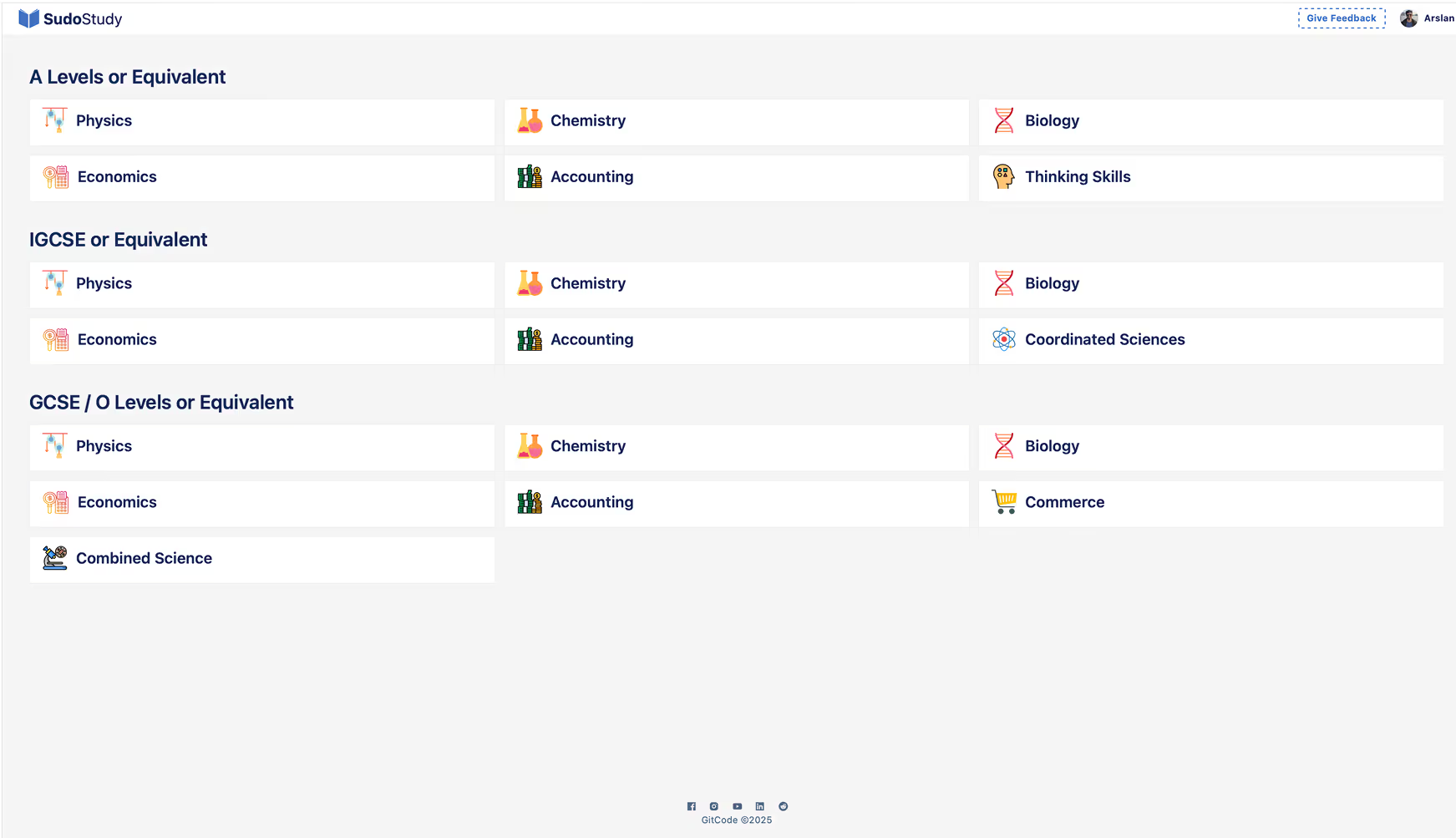

Home didn’t guide the next action. Students couldn’t see progress or gaps.

Decisions

- Added Health Score (how often you answer correctly) and Coverage Score (how much of a topic you’ve touched).

- Surfaced Recommended Topics using those two signals.

- Added a daily streak to encourage frequent, shorter sessions.
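The case study defines the two signals but not how they combine into Recommended Topics. As a rough illustration only, here is a minimal Python sketch assuming Health Score = correct answers ÷ answers attempted, Coverage Score = topic items touched ÷ total items in the topic, and an equal-weight blend (an assumption, not SudoStudy's actual logic) where the lowest blended score is recommended first. `TopicStats`, `recommend`, and the 0.5 weights are hypothetical names and values chosen for the sketch.

```python
from dataclasses import dataclass


@dataclass
class TopicStats:
    """Hypothetical per-topic practice counts for one student."""
    name: str
    answered: int  # questions attempted in this topic
    correct: int   # questions answered correctly
    touched: int   # distinct items (questions or subtopics) attempted at least once
    total: int     # distinct items available in the topic


def health_score(t: TopicStats) -> float:
    """How often the student answers correctly (0.0 before any attempt)."""
    return t.correct / t.answered if t.answered else 0.0


def coverage_score(t: TopicStats) -> float:
    """How much of the topic the student has touched (0.0 for an empty topic)."""
    return t.touched / t.total if t.total else 0.0


def recommend(topics: list[TopicStats], k: int = 3) -> list[str]:
    """Return up to k topic names, weakest or least-covered first.

    Assumption: the two signals are blended with equal weights; a lower
    blended score makes the topic a stronger candidate for the next session.
    """
    def priority(t: TopicStats) -> float:
        return 0.5 * health_score(t) + 0.5 * coverage_score(t)

    return [t.name for t in sorted(topics, key=priority)[:k]]


# Illustrative numbers only, not real SudoStudy data.
topics = [
    TopicStats("Algebra", answered=40, correct=34, touched=30, total=50),
    TopicStats("Trigonometry", answered=10, correct=4, touched=8, total=40),
    TopicStats("Statistics", answered=0, correct=0, touched=0, total=30),
]
print(recommend(topics, k=2))  # ['Statistics', 'Trigonometry']
```

With this blend, an untouched topic (zero coverage) or a low-accuracy one rises to the top, which keeps the "what should I study next?" answer to a single obvious choice; tuning the weights is the kind of advanced recommendation work the team deferred.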

Trade‑offs

We deferred advanced recommendation tuning to focus on activation and return usage first.

Results

Students had a clear next step, and weekly active users rose from 1,280 to 2,187.

Old ‘Home Dashboard’

New ‘Home Dashboard’

3

Reduce cognitive load - Practice mode, before & after

Problems

- Competing CTAs distracted from the task.

- No obvious exit from a session.

- Multi‑select topics didn’t match real study behavior.

- Redundant options added friction before the first question.

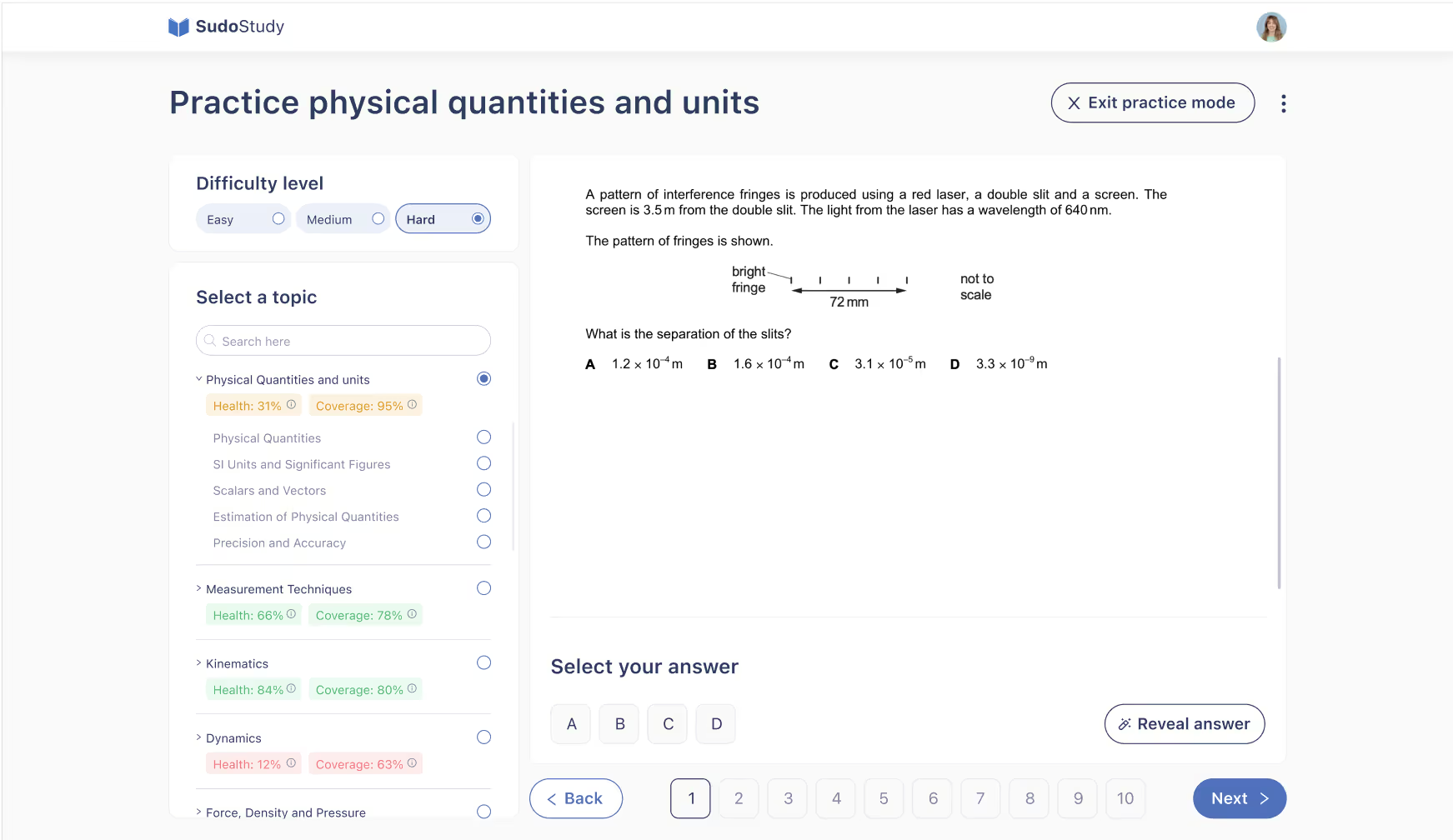

Decisions

- Added a clear “Exit practice” control to restore a sense of safety.

- Put difficulty and topic choice first, in a simple left‑to‑right flow.

- Switched topic selection to single‑select (radio) based on interviews: one topic per session.

- Used progressive disclosure. Secondary actions live behind an options menu.

- Kept the question area clean: answer → feedback → next.

Trade‑offs

Single‑select limits “batching,” but it aligns with how students actually work and reduces choice paralysis. We bookmarked multi‑topic practice as a future advanced mode.

Results

Students spent less time deciding and more time practicing, and practice sessions per user rose 27%.

Old ‘Practice Mode’

New ‘Practice Mode’

Old ‘Subject Dashboard’

New ‘Subject Dashboard’

What didn’t work (and what I learned)

The initial empty-state CTA on the subject page underperformed until I swapped a descriptive card for a clear primary button. Simple beats clever when users are anxious to start.

Old empty state: an “Enter practice mode” card with the description “Practice your concepts using personalized question recommendations paired with answer explanations - all without the hassle of creating a quiz.”

New empty state: a primary “Start practicing” button with the same description.

Outcomes and measurement

+66.7%

WAU

(from 1,280 to 2,187)

(from 1,280 to 2,187)

+27%

Sessions per user (practice mode usage)

-31%

In first-session drop-off

How I measured

- Post‑release window: ~2–4 weeks, all active users.

- Baseline: the prior 2–4 weeks.

- No major promos or unrelated product changes during this period.

How I work

- Translate user signal into product bets with clear guardrails.

- Ship in small, measurable slices; instrument the next answer into each release.

- Keep copy, layout, and controls brutally simple, especially for first‑run flows.

- Treat design as a team sport: I co‑planned sprints and wrote user stories with acceptance criteria; handoffs were detailed and predictable.

🤔 Reflections

This project reminded me that great UX isn't just about cleaner screens; it's about building systems that support users end to end.

Grounding every decision in research and real behavior helped turn scattered features into a more focused, usable learning experience.

Even small friction points can pile up, but thoughtful design rooted in user needs makes a measurable difference.

Testimonial

Arslan Arshad

CEO, SudoStudy

“I’m thrilled to recommend Momina Rehman for her outstanding contributions as Senior Product Designer at SudoStudy, an online assessment platform that empowers teachers to create, assign, and grade quizzes across A-Level, GCSE, and other exam boards.

From day one, Momina owned the end-to-end design process: she synthesized requirements from founders and stakeholders; conducted over 20 in-depth interviews with teachers and students to map real workflows; and translated insights into detailed wireframes and high-fidelity prototypes in Figma. By leveraging analytics tools like PostHog to identify under-used features, she expertly streamlined the information architecture, removing redundant elements, simplifying complex flows, and ensuring that core functionality was always front and center. The results spoke for themselves. Within a few weeks of rollout, weekly active users on the platform increased by 2x, and conversion rate saw an increase as well.

Beyond the metrics, Momina’s crystal-clear documentation and regular design walkthroughs became essential touchpoints for developers, product managers, and our marketing team, ensuring everyone moved in lockstep. Her blend of user-centered research, analytical rigor, and hands-on collaboration makes her an invaluable asset to any product team.”

Other projects

Solving fragmentation with the Unity Design System for UNATION, an event discovery platform

I led the creation of the Unity Design System, redesigned the event creation flow, shipped PWA versions of their apps, and launched a new revenue surface feature called “Experiences.” The result was 2x faster event publishing, +18% in cross-platform user engagement, and 110 “Experiences” sold within weeks of launch.

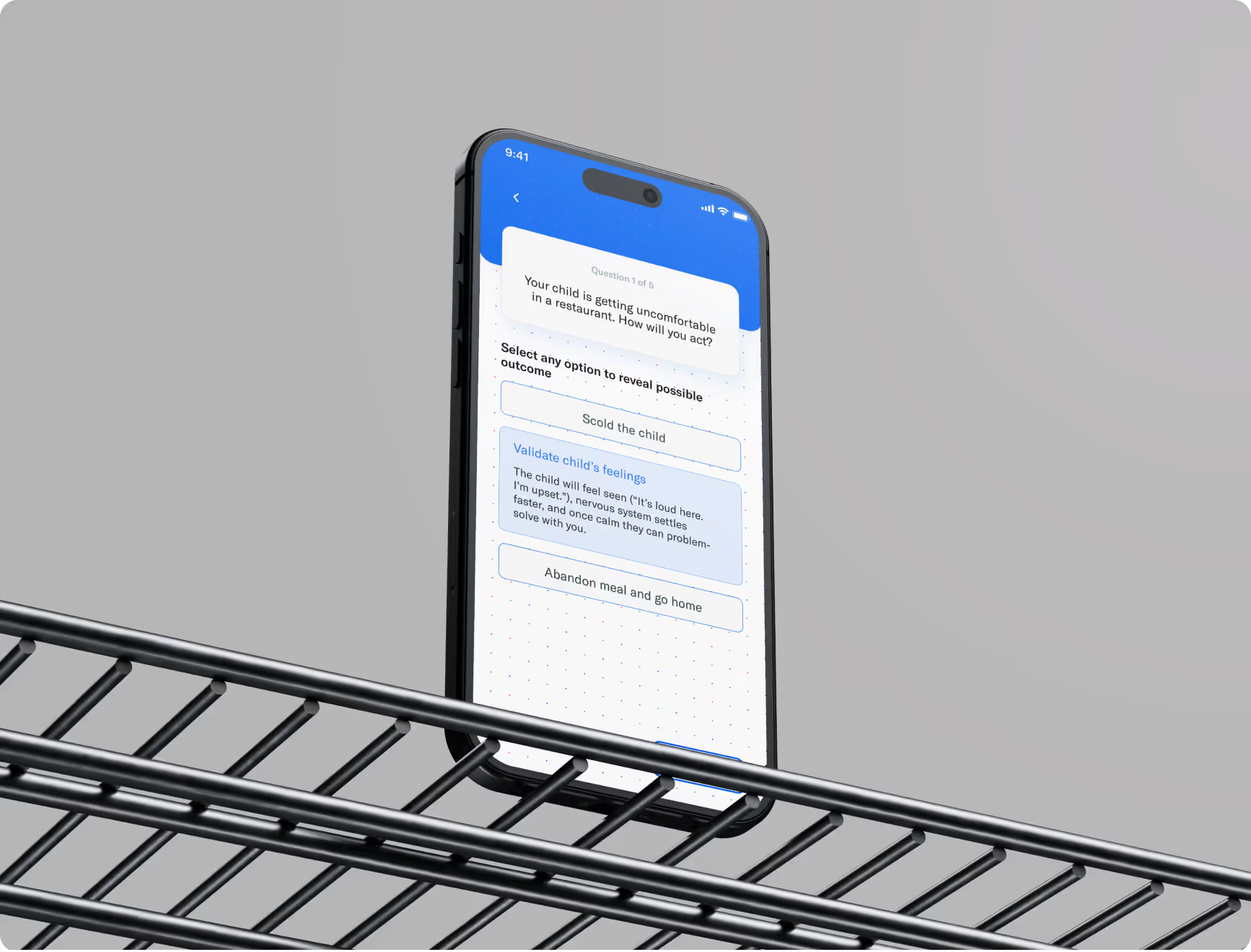

Building emotional intelligence with Parentify through culturally aware design

Parentify is a mobile app that helps South Asian parents build emotional intelligence and improve communication within families. I led UX strategy, research, and design of scenario-based learning, mood check-ins, and curated emotional resources. Community feedback showed strong emotional resonance, with early testers reporting increased self-reflection and deeper parent-child conversations.
